Hi,

About finding the total number of active devices: my entry point was luftdaten.info. The link from there goes to deutschland.maps.sensor.community, so you usually never see the sensor.community page. On Google you can only find old numbers (e.g. 300 devices in 2017). Btw, I still cannot see the numbers there. Maybe an ad blocker I have running suppresses the output (“An error occurred”).

Why I opened this discussion: a lot of professional research has been done in recent years on low-cost dust monitoring systems. But I have the impression that, unlike the extremely pleasing web interface and the considerable effort taken to facilitate firmware installation, this research has had nearly no impact on the project's sensor design and data quality. On the other hand, the network is growing, so its environmental footprint is rising. This project sends a strong message to politicians, and has maybe started more political discourse about this topic than any collection of signatures or demonstrations would have done. This is absolutely great. But in the end, it’s only a “picture for the media” and that’s mostly it. The data behind it is not always reliable.

I agree that nobody would expect 100% precise results from a citizen network like this. But precise numbers are shown on the map, and most people will only take a short look, without digging deeply into the details as a scientist would. The website gives the broad public no help in distinguishing obviously false readings from more reliable ones. You can click on each sensor to see the short-term and long-term PM data, but there is no direct overlay of humidity data. It would also be possible to introduce error bars, so that data quality could be seen at a glance. The map now also provides an AQI layer, so it makes a statement about the health risk in an area, but based on partially wrong data. It is one thing to collect data for scientists who are able to identify the Tegel airport approach lane with this data even in foggy weather, and quite another to provide a health statement to the broad public.

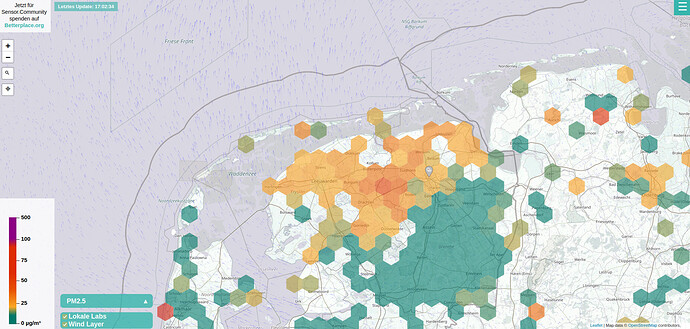

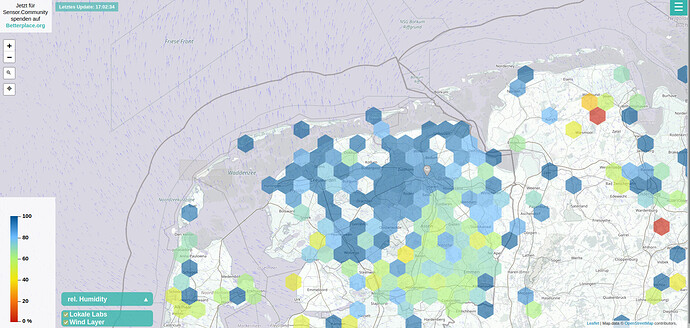

I took some screenshots of the Netherlands’ coast yesterday.

What you can see is that there is a whole region with high PM2.5 values. A lot of stations are yellow. But this is a coastal area with a lot of wind, where hardly anyone lives. So where does the fine dust come from?

Switching to the humidity map, you can see that exactly in this area humidity is above 80%. Maybe it’s simply raining there.

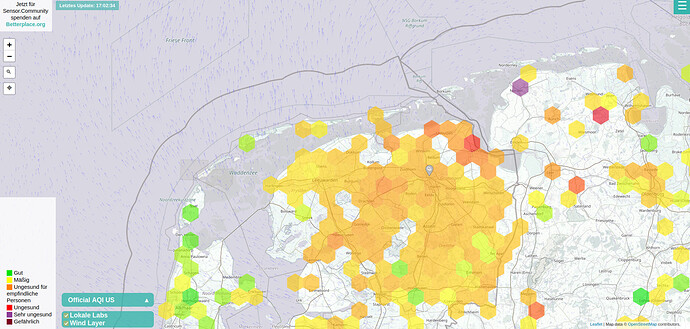

Then you switch to the AQI layer, which shows that it is unhealthy for sensitive persons to be there. So the website makes a statement about a health effect that is based on absolute values that are wrong - maybe you have the cleanest air you can breathe in this region at the moment. For everybody who only does a quick check without digging deeply into the details of how everything works (which is 95% of the page’s visitors, I assume), this can of course be frightening.

Maybe this project has too many problems to cope with for proper quality management. Everybody can join in with any device whatsoever, from carefully built with a heated dryer to completely off and installed in the wrong place; with different sensor types from different batches, with brand-new or aged lasers, installed a few meters above the ground or on top of a high building. But I don’t see any attempt to simply start dealing with it. E.g. one could start by contacting all installations with DHT22 sensors and asking for a replacement with another type. Or mark all humidity readings from stations with a DHT22 as invalid. I miss a scientific approach that identifies inaccuracies and sources of error and deals with them. I miss at least an “official” statement on the extent to which false readings can be tolerated. Yes, if a single station is off, it is no problem to identify it. But if a whole region is off due to the weather? You don’t know what is inside each water droplet without drying it. PM10? PM2.5? Or simply nothing, only water? Like at the Netherlands’ coast yesterday?
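To make the idea of “marking readings” a bit more concrete, here is a minimal sketch of how a per-reading quality flag could look. Everything in it is an assumption for illustration: the `Reading` structure, the `quality_flag` function, the labels, and the 80% limit (taken only from the humidity I saw on the map above, not from any paper or from the project's code).

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical limit: above this relative humidity the PM value is treated as suspect.
HUMIDITY_LIMIT_PERCENT = 80.0
# Humidity sensor types whose readings are not trusted (the DHT22, as suggested above).
UNTRUSTED_HUMIDITY_SENSORS = {"DHT22"}


@dataclass
class Reading:
    pm25: float                      # PM2.5 in µg/m³ as reported by the particle sensor
    humidity: Optional[float]        # relative humidity in %, None if not measured
    humidity_sensor: Optional[str]   # sensor type label, e.g. "DHT22" or "other"


def quality_flag(reading: Reading) -> str:
    """Return 'ok', 'suspect' or 'unknown' for a single reading."""
    # Without a trustworthy humidity value we cannot judge the PM value at all.
    if reading.humidity is None or reading.humidity_sensor in UNTRUSTED_HUMIDITY_SENSORS:
        return "unknown"
    # High humidity: droplets may be counted as particles, so flag the value.
    if reading.humidity > HUMIDITY_LIMIT_PERCENT:
        return "suspect"
    return "ok"


if __name__ == "__main__":
    for r in (Reading(40.0, 85.0, "other"), Reading(40.0, 85.0, "DHT22"), Reading(10.0, 50.0, "other")):
        print(r, "->", quality_flag(r))
```

A flag like this would not correct anything, but the map could grey out or hatch “suspect” and “unknown” stations, so a quick visitor sees that the number is not to be taken at face value.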

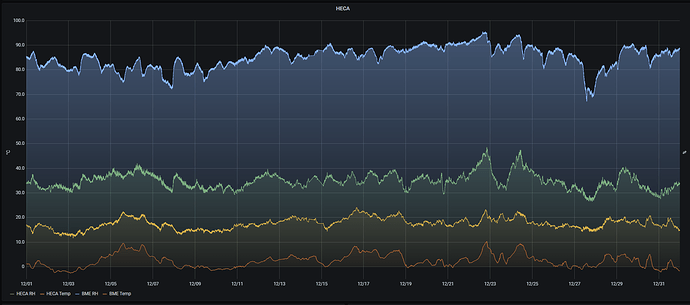

I bought my hardware some years ago, right after the project started, but after reading the first research papers I decided not to set it up, because the data quality and security issues had not yet been overcome. Some weeks ago I found it in a cardboard box and did some research on luftdaten.info, finding papers showing that mathematical compensation with the help of the humidity sensor is possible. I assumed this had already been implemented, so I installed the sensor and registered it. But then I saw a huge influence of humidity on my data and spent some hours finding out whether mathematical compensation had been implemented or not. It also took me quite a while to find the GitHub repository with the source code. Deeply hidden in a forum post I found the statement that it had not been possible to implement compensation algorithms.

On https://pudi.lubw.de/detailseite/-/publication/90536 [p.17] you can find that the SDS011 can overestimate PM10 by more than 500%. The same paper shows on p. 15 that increasing the length of the pipe from 20 cm to 100 cm reduces the airflow to 62% - with a massive impact on the registered amount of particles, of course. So, that’s quite a bit!

The more the sensor network grows, the bigger its environmental impact becomes. It is often overlooked that electricity does not simply come from a wall outlet and internet data not from a magic cloud, but that both need a very power-hungry infrastructure behind them. According to https://www.watson.ch/digital/wissen/852678578-4-gramm-co-jedes-mail-das-du-verschickst-waermt-die-erde-auf , sending a single small email emits 4 g CO2. If we say an airrohr datagram is similar to a small email, and we have 24 datagrams an hour, this is about 840 kg per station, or 12,614 tons for all 15,000 stations, per year. Even if it’s only 0.4 g CO2 per datagram (because there is less data storage), it’s still quite a bit. Also, the production of the hardware consumes quite a lot of resources. 15,000 sensors that have to be replaced every 5-6 years?
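For anyone who wants to check or vary the estimate, here is the arithmetic as a small sketch. The function names are made up for illustration; the inputs (4 g or 0.4 g per datagram, 24 datagrams an hour, 15,000 stations) are the assumptions from the paragraph above, not measured values.

```python
HOURS_PER_YEAR = 24 * 365  # ignoring leap years


def annual_co2_kg_per_station(grams_per_datagram: float, datagrams_per_hour: int = 24) -> float:
    """CO2 per station and year in kg, assuming a fixed amount per datagram."""
    datagrams_per_year = datagrams_per_hour * HOURS_PER_YEAR
    return grams_per_datagram * datagrams_per_year / 1000.0


def annual_co2_tonnes_network(grams_per_datagram: float, stations: int = 15_000,
                              datagrams_per_hour: int = 24) -> float:
    """CO2 for the whole network and year in metric tons."""
    per_station_kg = annual_co2_kg_per_station(grams_per_datagram, datagrams_per_hour)
    return per_station_kg * stations / 1000.0


if __name__ == "__main__":
    for grams in (4.0, 0.4):
        print(f"{grams} g/datagram: "
              f"{annual_co2_kg_per_station(grams):.2f} kg per station, "
              f"{annual_co2_tonnes_network(grams):.1f} t for the network per year")
```

With 4 g this gives roughly 841 kg per station and about 12,614 tons for the network; with 0.4 g it is a tenth of that, so still more than a thousand tons per year.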

And so there is a question: how many resources do we want to spend on only a “picture for the media”? Or on reliable data, so that a comparison with the data collected by the governments can be made? What can (and has to) be a “middle way”? Everything can stay as it is, thus creating only a (wrong) “picture” for the broad public during the time of the year when fine dust really matters, and producing hundreds of tons of CO2 for it. Or we produce the CO2 to create data whose quality everybody can easily estimate, even if the data is wrong. Or we do not spend it at all during the times when data quality cannot be ensured, by switching off the stations.

How valuable is this inaccurate data? For the public? For scientists? What is done with the research results of the scientists who use the inaccurate data? One can identify the Tegel airport approach lane on a dry summer day as well, so why should one take unreliable data from a high-humidity period? Maybe it’s interesting to identify the fine dust in the approach lane, not only condensed water from the engines or from some thermal effects on the wings?

NAM (https://air.nettigo.pl/baza-wiedzy/nam-033-std-fa/?setlang=en) looks quite interesting.

Has a comparison been made with certified national stations? I did not find anything about it on the web page. How was it done? Under which climatic conditions?

It’s an interesting approach to heat up the air during the time between the measurements and then measure the heated air. But then precise timing is needed, as the measurement must happen neither before nor after the warm air arrives, and the measurement time can only be very short, as the first air passing through the tube will cool down immediately. You cannot heat up the whole housing on cold days with only 1 W to prevent this. Also, when the air is heated for 2 minutes in the chamber, this might influence the composition of the fine dust (particles may settle or stick to the walls), as there is no air movement during this time. If there is wind outside, it may exchange the air in the chamber several times during heat-up, so reliable heating may not be possible.

Also, the total energy consumed does not depend on the time but on the volume of air that is heated, and especially on its water content and on whether hygroscopic salts are dissolved in the water. You can heat it for 1 second with 100 W or for 100 seconds with 1 W. What may be important is thermal insulation from the environment, so the heat is kept inside the heater and not wasted.
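A small sketch of this point, under simplifying assumptions: energy is just power times time, and the energy needed to warm a volume of dry air follows from its mass and specific heat. The constants are rough textbook values for air at about room temperature, the function names are made up for illustration, and the energy for evaporating droplets (the actually interesting part) is deliberately not modelled here.

```python
AIR_DENSITY_KG_PER_M3 = 1.2       # rough value for air near 20 °C at sea level
AIR_SPECIFIC_HEAT_J_PER_KG_K = 1005.0  # rough value for dry air at constant pressure


def heater_energy_joules(power_watts: float, seconds: float) -> float:
    """Energy delivered by a heater running at constant power."""
    return power_watts * seconds


def dry_air_heating_energy_joules(volume_litres: float, delta_t_kelvin: float) -> float:
    """Energy to warm a volume of dry air by delta_t, with no losses and no water."""
    mass_kg = AIR_DENSITY_KG_PER_M3 * volume_litres / 1000.0
    return mass_kg * AIR_SPECIFIC_HEAT_J_PER_KG_K * delta_t_kelvin


if __name__ == "__main__":
    # Same energy either way: 100 W for 1 s or 1 W for 100 s.
    print(heater_energy_joules(100, 1), heater_energy_joules(1, 100))
    # Hypothetical example: warming 1 litre of dry air by 10 K.
    print(dry_air_heating_energy_joules(1.0, 10.0))
```

Warming the dry air itself is cheap; what costs energy in practice is the water in it and the heat lost to the environment, which is why insulation matters more than the heating duration.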

The PTC is silver, so it is hard for it to emit heat into the air, while the surrounding tube is black and thus absorbs heat easily - both from the sunlight outside and from the PTC. The case is not naturally ventilated, so it will always have a microclimate inside, as will the humidity/temperature sensor, since its radiation shielding is quite basic.

What I can also see is that the humidity sensor is outside the case, so there is no feedback about the humidity in the air stream at all. Thus the PTC cannot be regulated to reach a constant rH level, and the humidity in the air stream is not even known. Did you do your research with a second sensor at the fan exhaust?

A new paper dealing with air preheating came “on the market” last month. They use a 10 W heater with a 50 cm long insulated brass tube, bringing humidity down to 35% and measuring directly inside the measurement chamber of the sensor to provide feedback to the heating element:

https://www.researchgate.net/publication/351245900_Comparison_of_a_Computational_Method_for_Correcting_the_Humidity_Influence_with_the_Use_of_a_Low-Cost_Aerosol_Dryer_on_a_SDS011_Low-Cost_PM-Sensor

The results are pretty good, but the power consumption is high and the construction may be too hard for the average amateur.

Cheers,

Andreas